Using Monte Carlo Simulation to Understand the Sensitivity of a Complex System

Bastian Solutions | 23 November 2011

During the design process of any system, it is desirable to know how the possible range of input values will affect the system output. One back-of-the-envelope approach is to estimate the resources the system will use, err on the high side, and so protect yourself against failure. But what if you are designing a race car component? Is it always necessary to be conservative? If you are, your competitor's car will be faster and more nimble, and it will speed right past your driver.

Understanding your risks (the range of inputs that will play a role in your system design) is the first step in creating a mitigation strategy. To provide a tangible example of input risks, I conducted an experiment on a toy robot I purchased for a hobby project. Allow me the liberty of saying that this experiment is analogous to a client who wants to meet a certain throughput for a robotic mixed-case palletizing operation. For either the toy robot or the palletizing operation, the quick way to determine throughput is to estimate the average time to pick a product and place it; but in the real world, averages can be a dangerous basis for decisions.

SKU throughput requirements vary with time of day, seasonality, location, and many other factors that can change how often a product is picked. So there is a risk factor in throughput that comes from what you don't know. Instead of relying on averages and succumbing to unknowns, you can use a Monte Carlo simulation to put bounds on your inputs and see how those bounds carry through to the output.

For example, you might not know how many Go-GURTs you will sell in the third quarter, but you might be able to define a 95% confidence interval and assume a distribution that qualitatively matches the results you expect based on your previous experience. In my experiment, I used the robotic arm to pick up three pieces of a 3D jigsaw puzzle. There were blue, green, and yellow pieces, randomly oriented at the same location relative to the robot. I then articulated the robot with the controller, which has one button for each axis and one more for the hand. It was difficult to move more than one axis at a time, but once my learning curve had flattened and my average cycle time had stabilized, I began taking data.
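One way to turn such an interval into an input distribution is sketched below, assuming a normal distribution (the post leaves the choice of distribution open). The helper `normal_from_interval` and the 8,000 to 12,000 unit range are hypothetical; the helper centers the distribution on the interval and chooses the standard deviation so that the interval covers the requested probability.

```python
from statistics import NormalDist


def normal_from_interval(low, high, level=0.95):
    """Hypothetical helper: a normal distribution whose central `level`
    interval is [low, high]. Assumes the interval is symmetric about the mean."""
    if not (low < high and 0 < level < 1):
        raise ValueError("need low < high and 0 < level < 1")
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return NormalDist(mu=(low + high) / 2, sigma=(high - low) / (2 * z))


# Hypothetical planning range: 95% sure third-quarter sales land between
# 8,000 and 12,000 units.
demand = normal_from_interval(8_000, 12_000)
print(round(demand.mean), round(demand.stdev))  # → 10000 1020
draws = demand.samples(5, seed=42)              # inputs for five Monte Carlo iterations
```

A normal distribution is only one choice; if experience says the range is skewed, a triangular or other asymmetric distribution may fit the "qualitative" picture better.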

I quickly found that errors in my judgment could drastically affect my cycle time (e.g., I would bungle the grab for a part, and it would slide away from the robot). My initial thought was to reject these trials, but then I changed my mind: this type of anomaly happens all the time in the real world and needs to be represented in my Monte Carlo model.

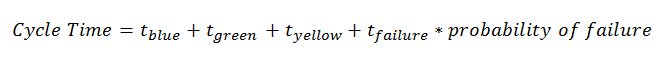

In a robotic palletizing system, there can be missing box flaps, vacuum cups that may need to be replaced, human error, maintenance downtime, and other factors that affect throughput, and I could add each of them to my model as a "register" that toggles between "0" and "1". In other words, once you have an expression for the total time of one full palletizing cycle, you can add anomaly events that add time to that cycle and obtain a distribution for your throughput that includes noisy factors such as human error and system downtime. After you set up the model, you can define distributions for each of your inputs.
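The cycle-time equation itself is not reproduced in this post, so the sketch below assumes a simple form: cycle time equals the sum of the individual pick-and-place times plus an anomaly register (0 or 1) multiplied by a time penalty. The function name `cycle_time`, the triangular pick times, and every number in the usage lines are hypothetical placeholders, not the author's measurements.

```python
import random


def cycle_time(pick_ranges, anomaly_prob, anomaly_penalty, rng):
    """Simulate one pick-and-place cycle (hypothetical, assumed model).

    pick_ranges     -- list of (low, mode, high) seconds, one tuple per pick
    anomaly_prob    -- probability that an anomaly (e.g. a bungled grab) occurs
    anomaly_penalty -- extra seconds added to the cycle when it does
    rng             -- a random.Random instance, so runs are reproducible
    """
    # Nominal time: one triangular draw per pick, summed over the cycle.
    nominal = sum(rng.triangular(low, high, mode) for low, mode, high in pick_ranges)
    # The "register": 1 if an anomaly happens in this cycle, otherwise 0.
    register = 1 if rng.random() < anomaly_prob else 0
    return nominal + register * anomaly_penalty


# Hypothetical inputs: three picks, a 10% chance of a bungled grab per cycle,
# and a 20-second recovery penalty when it happens.
picks = [(15.0, 20.0, 30.0)] * 3
rng = random.Random(1)
samples = [cycle_time(picks, 0.10, 20.0, rng) for _ in range(10_000)]
print(f"mean cycle time: {sum(samples) / len(samples):.1f} s")
```

Several independent anomaly types (missing flaps, worn vacuum cups, downtime) could each get their own register and penalty in the same way.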

In this simulation, I entered a minimum, an average, and a maximum into each triangular distribution. The minimum was set as the 10th percentile and the maximum as the 90th percentile, and the average was used as the peak of the triangle (its most likely value, or mode). Once the model has been run (in this case for 10,000 iterations), you can see the distribution of your output. These inputs resulted in a 90% confidence interval of 61.1 to 94.5 seconds. The average of my test runs was 85.75 seconds, which fell within my confidence interval, but the distribution tells a much fuller story about how much the output can vary.
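Because the entered minimum and maximum are the 10th and 90th percentiles rather than the hard endpoints of the triangle, the true endpoints have to be solved for before sampling. Below is a minimal sketch, assuming a central 90% output interval taken from the 5th and 95th percentiles of the simulated totals. The helper names and the (p10, most likely, p90) values are hypothetical, not the author's inputs, and commercial simulation tools may solve for the endpoints differently. It uses fixed-point iteration on the triangular CDF, then Python's `random.triangular` and `statistics.quantiles`.

```python
import math
import random
import statistics


def triangular_from_percentiles(p10, mode, p90, tol=1e-9, max_iter=1000):
    """Hypothetical helper: find the (low, high) endpoints of a triangular
    distribution with peak `mode` whose 10th and 90th percentiles are p10, p90."""
    if not (p10 <= mode <= p90 and p10 < p90):
        raise ValueError("need p10 <= mode <= p90 and p10 < p90")
    left, right = mode - p10, p90 - mode  # gaps between the percentiles and the peak
    u = v = p90 - p10                     # guesses for (mode - low) and (high - mode)
    for _ in range(max_iter):
        w = u + v                         # total width, high - low
        # Tail conditions from the triangular CDF:
        #   P(X < p10) = (u - left)^2 / (w * u) = 0.1
        #   P(X > p90) = (v - right)^2 / (w * v) = 0.1
        new_u = left + math.sqrt(0.1 * w * u)
        new_v = right + math.sqrt(0.1 * w * v)
        done = max(abs(new_u - u), abs(new_v - v)) < tol * (p90 - p10)
        u, v = new_u, new_v
        if done:
            return mode - u, mode + v
    raise RuntimeError("endpoint search did not converge")


def simulate(inputs, iterations=10_000, seed=0):
    """Monte Carlo run: each input is (p10, most likely, p90) seconds and the
    output of one iteration is the summed cycle time."""
    rng = random.Random(seed)
    fitted = [(*triangular_from_percentiles(p10, mode, p90), mode)
              for p10, mode, p90 in inputs]
    return [sum(rng.triangular(low, high, mode) for low, high, mode in fitted)
            for _ in range(iterations)]


# Hypothetical (p10, most likely, p90) seconds for three picks.
picks = [(15.0, 22.0, 35.0), (18.0, 25.0, 40.0), (16.0, 24.0, 33.0)]
totals = simulate(picks)
cuts = statistics.quantiles(totals, n=20)  # cut points at 5%, 10%, ..., 95%
print(f"90% interval: {cuts[0]:.1f} to {cuts[-1]:.1f} s")
print(f"mean: {statistics.fmean(totals):.1f} s")
```

Treating the minimum and maximum as percentiles widens each triangle beyond the entered values, which leaves room for the occasional slow or fast cycle that a hard minimum and maximum would rule out.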

In summary, Monte Carlo methods are a way to use engineering insight and qualitative assessments of your inputs to produce a quantitative output. In reality, every model you create will have flaws. However, the Monte Carlo method provides an entire topography of results, so you can understand how your system performs without hand-cranking hundreds of what-ifs in an ordinary spreadsheet.

If you consider including sensitivity analysis in your deliverables to internal and external customers, it takes a new paradigm to admit that your inputs span a range of values. But this admission can be the starting point for a conversation among a project's stakeholders that helps everyone understand where the risk factors are, and it can spur the team to exploit the power of the Monte Carlo model.
